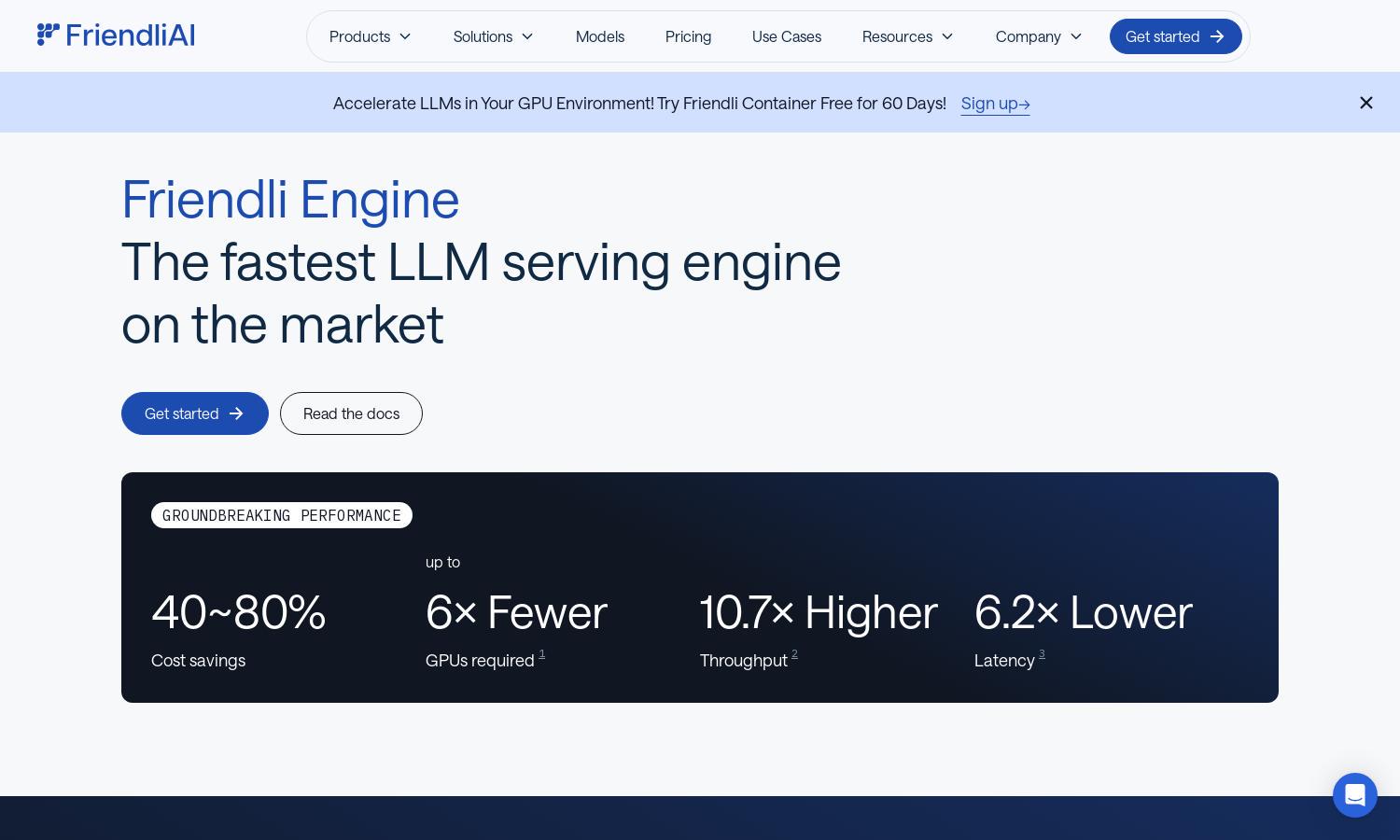

Friendli Engine

About Friendli Engine

Friendli Engine is an LLM inference engine built for high performance and cost efficiency. Designed for developers and businesses that serve generative AI models, it uses techniques such as iteration batching and speculative decoding to increase processing speed, which enables rapid model deployment and higher overall productivity.

Friendli Engine offers flexible pricing plans tailored to different user needs. Each subscription tier is built around scalable performance, so organizations can adapt as their demands grow. Users get cost-efficient access to advanced LLM capabilities, and upgrading adds enhanced features and priority support to match their business goals.

Friendli Engine's user interface is designed for straightforward navigation. The layout puts advanced features and task management within easy reach, and its responsive design and accessible tools let users work toward their AI objectives without friction.

How Friendli Engine works

To get started with Friendli Engine, users create an account and complete a short onboarding process. From the dashboard, they can then choose from multiple generative AI models, set configurations, and launch LLM inference tasks. The engine's optimizations speed up processing and reduce costs, so users can easily run and manage their AI workloads.

Key Features of Friendli Engine

Iteration Batching Technology

Friendli Engine's iteration batching technology schedules concurrent generation requests at the level of individual decoding iterations instead of whole requests, which Friendli reports achieves up to tenfold higher inference throughput. Because finished requests leave the batch immediately and new ones can join at the next iteration, users also see reduced latency, making this one of the engine's standout capabilities for efficient LLM deployments.
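To make the idea concrete, here is a minimal Python sketch of iteration-level scheduling in general, not Friendli Engine's actual scheduler. It counts decoding iterations for a hypothetical workload under two policies: request-level batching, where a batch runs until its longest request finishes, and iteration batching, where finished requests leave and waiting requests join after every step. The `Request` class, both function names, and the assumption that every iteration costs the same regardless of batch size are illustrative simplifications.

```python
from collections import deque
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    rid: int
    tokens_left: int  # hypothetical: tokens this request still needs to generate


def static_batching_steps(lengths: List[int], max_batch: int) -> int:
    """Request-level batching: each batch runs until its longest request finishes."""
    steps = 0
    for i in range(0, len(lengths), max_batch):
        steps += max(lengths[i:i + max_batch])
    return steps


def iteration_batching_steps(lengths: List[int], max_batch: int) -> int:
    """Iteration-level batching: the scheduler re-forms the batch after every step."""
    waiting = deque(Request(i, n) for i, n in enumerate(lengths))
    running: List[Request] = []
    steps = 0
    while waiting or running:
        # Admit waiting requests into any free slots at the iteration boundary.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decoding iteration: every running request emits one token.
        for req in running:
            req.tokens_left -= 1
        steps += 1
        # Finished requests leave immediately, freeing their slots.
        running = [r for r in running if r.tokens_left > 0]
    return steps
```

With `lengths = [8, 2, 2, 2, 8, 2, 2, 2]` and `max_batch = 4`, the request-level policy needs 16 iterations while the iteration-level policy needs 10, because short requests no longer wait for the longest request in their batch.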

Multi-LoRA Support

Friendli Engine can serve multiple LoRA (low-rank adaptation) models simultaneously on fewer GPUs, improving efficiency and reducing infrastructure requirements. This makes it easy for developers to customize LLMs while keeping their AI solutions accessible and cost-effective.
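The general mechanism behind multi-LoRA serving can be sketched in plain Python: the base weight matrix is stored once, each adapter adds only a low-rank pair `A` and `B`, and each request in a batch selects its adapter, giving `y = xW + alpha * (xA)B`. This is a minimal illustration of the LoRA technique, not Friendli Engine's implementation; `MultiLoRALayer`, its methods, and the per-request loop are hypothetical, and a real engine would batch these computations on the GPU rather than loop in Python.

```python
from typing import Dict, List, Optional, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def scale(a: Matrix, s: float) -> Matrix:
    return [[x * s for x in row] for row in a]


class MultiLoRALayer:
    """One linear layer whose base weight W is shared by every adapter.

    Each adapter stores only A (d_in x r) and B (r x d_out), so it costs
    r * (d_in + d_out) parameters instead of a full copy of W.
    """

    def __init__(self, base_weight: Matrix):
        self.w = base_weight
        self.adapters: Dict[str, Tuple[Matrix, Matrix, float]] = {}

    def add_adapter(self, name: str, a: Matrix, b: Matrix, alpha: float = 1.0) -> None:
        self.adapters[name] = (a, b, alpha)

    def forward(self, batch: List[Tuple[Optional[str], List[float]]]) -> List[List[float]]:
        """batch: (adapter name or None for the base model, input row vector)."""
        outputs = []
        for name, x in batch:
            y = matmul([x], self.w)  # shared base computation, same W for all requests
            if name is not None:
                a, b, alpha = self.adapters[name]
                delta = matmul(matmul([x], a), b)  # low-rank update (xA)B
                y = add(y, scale(delta, alpha))
            outputs.append(y[0])
        return outputs
```

The memory argument follows from the shapes: for a hypothetical 4096 × 4096 weight and rank r = 8, each adapter adds 8 × (4096 + 4096) = 65,536 parameters, versus about 16.8 million for a separate full copy of the matrix.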

Speculative Decoding

Speculative decoding in Friendli Engine accelerates LLM inference by making educated guesses about several future tokens and verifying them in parallel, instead of generating strictly one token at a time. Incorrect guesses are discarded, so response time improves without compromising output accuracy.
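Below is a minimal Python sketch of greedy speculative decoding in its standard draft-and-verify form, assuming toy "models" that map a token sequence to the next token id; Friendli Engine's own implementation may differ. A draft model proposes `k` tokens, the target model checks them, the longest correct prefix is kept, and the first wrong guess is replaced by the target's own token. Every kept token is exactly what the target would have chosen, so the result matches plain greedy decoding with the target. The functions `greedy_decode`, `speculative_decode`, `target`, and `draft` are hypothetical.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[int]], int]  # hypothetical greedy model: context -> next token id


def greedy_decode(model: NextToken, prompt: List[int], n: int) -> List[int]:
    seq = list(prompt)
    for _ in range(n):
        seq.append(model(seq))
    return seq[len(prompt):]


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       n: int, k: int = 4) -> Tuple[List[int], int]:
    seq = list(prompt)
    target_passes = 0
    while len(seq) - len(prompt) < n:
        # 1. The cheap draft model guesses k tokens ahead.
        guess: List[int] = []
        for _ in range(k):
            guess.append(draft(seq + guess))
        # 2. The target checks every guessed position. A real engine scores all
        #    positions in ONE forward pass, so we count this round as one pass.
        target_passes += 1
        accepted: List[int] = []
        for i in range(k):
            expected = target(seq + guess[:i])
            if guess[i] != expected:
                accepted.append(expected)  # replace the first wrong guess, drop the rest
                break
            accepted.append(guess[i])
        else:
            accepted.append(target(seq + guess))  # all guesses correct: one bonus token
        seq.extend(accepted)
    return seq[len(prompt):len(prompt) + n], target_passes


# Hypothetical toy models: the draft agrees with the target except after token 4.
def target(seq: List[int]) -> int:
    return (seq[-1] * 3 + 1) % 10


def draft(seq: List[int]) -> int:
    return 0 if seq[-1] == 4 else (seq[-1] * 3 + 1) % 10


tokens, passes = speculative_decode(target, draft, [0], n=12)
assert tokens == greedy_decode(target, [0], 12)
print(passes)  # 4 verification passes instead of 12 sequential target steps
```

The speedup depends on how often the draft agrees with the target: here three or four tokens are accepted per pass. With sampling instead of greedy decoding, the standard formulation uses a rejection-sampling acceptance rule, which is what keeps the output distribution identical to the target model's.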
