Agenta

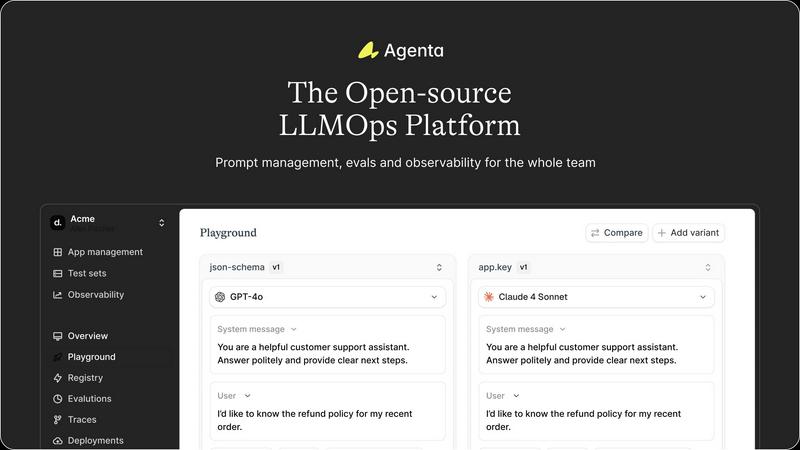

Agenta is the open-source LLMOps platform for building and managing reliable LLM applications.

About Agenta

Agenta is an open-source LLMOps platform that gives AI development teams the infrastructure to build, evaluate, and deploy reliable Large Language Model (LLM) applications. It targets two common problems in LLM development: model outputs are inherently unpredictable, and teams often lack a structured, collaborative process, which leads to scattered prompts, siloed teams, and changes deployed without validation. Agenta acts as a central hub where developers, product managers, and subject matter experts experiment with prompts and models, run systematic evaluations, and debug production issues using real data.

Its main value is replacing ad-hoc, guesswork-driven workflows with evidence-based, repeatable LLMOps practices. Because prompt management, automated evaluation, and observability live in one platform, teams can iterate faster, validate each change before it ships, and keep visibility into how the system performs in production, which lowers both the risk and the time-to-production of LLM-powered features.

Features of Agenta

Unified Playground for Experimentation

Agenta provides a central playground where teams compare prompts, parameters, and foundation models from different providers side by side in real time. Because the playground is model-agnostic, teams can compare providers on equal terms and avoid locking themselves into a single vendor. It also connects to production data: developers can save a problematic trace directly into a test set and iterate on it immediately, closing the feedback loop between development and production.

Automated and Integrated Evaluation Framework

The platform replaces subjective "vibe testing" with a systematic, automated evaluation process. Teams can plug in a variety of evaluators, including LLM-as-a-judge setups, built-in metrics, and custom code. Notably, evaluators can score full execution traces, checking each intermediate step of an agent's reasoning chain rather than only the final output. This pinpoints where a failure or performance regression occurs, so changes are validated with concrete evidence before deployment.
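To make step-level trace evaluation concrete, here is a minimal sketch in plain Python. It does not use Agenta's SDK: the `Span` structure, the `evaluate_trace` and `first_failing_step` helpers, and the two custom code evaluators are all hypothetical, and step names are assumed to be unique within a trace.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Span:
    """One step of a hypothetical agent trace (not Agenta's data model)."""
    name: str    # step name, assumed unique within a trace
    output: str  # what the step produced


# A custom code evaluator maps a step's output to a score in [0, 1].
Evaluator = Callable[[str], float]


def evaluate_trace(spans: List[Span], evaluators: Dict[str, Evaluator]) -> Dict[str, float]:
    """Score every step that has an evaluator registered under its name."""
    return {s.name: evaluators[s.name](s.output) for s in spans if s.name in evaluators}


def first_failing_step(scores: Dict[str, float], threshold: float = 0.5) -> Optional[str]:
    """Return the earliest step (in trace order) scoring below the threshold."""
    for name, score in scores.items():
        if score < threshold:
            return name
    return None


# Usage: a two-step retrieval-augmented trace where retrieval silently failed.
trace = [
    Span("retrieve", ""),
    Span("generate", "Paris is the capital of France."),
]
evaluators = {
    "retrieve": lambda out: 1.0 if out.strip() else 0.0,     # context must be non-empty
    "generate": lambda out: 1.0 if "Paris" in out else 0.0,  # expected fact must appear
}
scores = evaluate_trace(trace, evaluators)
print(scores)                      # {'retrieve': 0.0, 'generate': 1.0}
print(first_failing_step(scores))  # retrieve
```

Scoring only the final answer would report success here, even though the retrieval step returned nothing; the per-step scores show where the problem started.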

Comprehensive Observability and Tracing

Agenta traces every LLM request end to end, so teams can pinpoint the exact step that fails inside a complex chain or agentic workflow. Team members or end users can annotate any production trace, and a trace can be turned into a test case with a single click. The platform also supports online evaluations that run on live production traffic, continuously monitoring performance and surfacing regressions early.

Collaborative Workflow for Cross-Functional Teams

Agenta breaks down silos by giving the entire team the same tools. Its secure UI lets domain experts and product managers edit prompts, run experiments, and compare evaluation results without writing code, opening up the development process while keeping governance in place. Because the API and the UI offer the same capabilities, programmatic workflows and UI-based collaboration work side by side in one central hub.

Use Cases of Agenta

Rapid Prototyping and A/B Testing of LLM Features

Development teams can use Agenta's playground to prototype prompt strategies and model combinations for a new feature, such as a customer support chatbot or a content summarizer. They can run A/B tests, track each prompt's version history, and use automated evaluations to determine quantitatively which configuration performs best before deploying it to production.
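The core of an automated A/B comparison is scoring every variant against the same test set with the same evaluator and keeping the top scorer. The sketch below shows that idea in plain Python, independent of Agenta's API. The test set, the `exact_match` metric, the helper names, and the two stub functions standing in for LLM calls with different prompts are all hypothetical.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Hypothetical test set of (input, expected output) pairs.
TEST_SET: List[Tuple[str, str]] = [
    ("2+2", "4"),
    ("capital of France", "Paris"),
    ("3*3", "9"),
]


def exact_match(output: str, expected: str) -> float:
    """Simple metric: 1.0 on a case-insensitive exact match, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def score_variant(variant: Callable[[str], str], test_set: List[Tuple[str, str]]) -> float:
    """Average evaluator score of one variant over the whole test set."""
    return mean(exact_match(variant(q), expected) for q, expected in test_set)


def pick_best(
    variants: Dict[str, Callable[[str], str]], test_set: List[Tuple[str, str]]
) -> Tuple[str, Dict[str, float]]:
    """Score every variant on the same test set and return the top scorer."""
    scores = {name: score_variant(fn, test_set) for name, fn in variants.items()}
    return max(scores, key=scores.get), scores


# Stub "variants" standing in for two prompt/model configurations.
def prompt_a(q: str) -> str:
    return {"2+2": "4", "capital of France": "paris", "3*3": "6"}.get(q, "")


def prompt_b(q: str) -> str:
    return {"2+2": "4", "capital of France": "Paris", "3*3": "9"}.get(q, "")


best, scores = pick_best({"prompt_a": prompt_a, "prompt_b": prompt_b}, TEST_SET)
print(best)  # prompt_b
```

In practice the exact-match metric would be swapped for whichever evaluator fits the feature (an LLM-as-a-judge, for example); the comparison logic stays the same.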

Production Debugging and Root Cause Analysis

When an LLM application behaves unexpectedly in production, engineers can use Agenta's observability traces to drill down into specific user sessions and determine whether the error came from a misinterpreted prompt, a faulty API call, or poor model reasoning. They can then save the offending trace to a test set and debug it in the playground, turning a production incident into a concrete improvement.

Governance and Compliance for Regulated Industries

In sectors such as finance or healthcare, where AI outputs must be accurate and auditable, Agenta provides a framework for rigorous evaluation. Teams can build their evaluation process around custom, domain-specific evaluators. Every prompt change is versioned, and its impact is validated against a comprehensive test suite. The resulting audit trail of experiments, evaluations, and approvals supports compliance and responsible AI governance.

Enabling Subject Matter Expert Contribution

A company building a legal research assistant can use Agenta to bring its lawyers directly into the AI development loop. Lawyers can use the no-code UI to refine prompts for correct legal terminology, evaluate the model's responses for accuracy, and leave feedback on traces. This collaboration helps make the final product both technically sound and legally accurate, and it speeds up development.

Frequently Asked Questions

Is Agenta truly open-source?

Yes. Agenta's source code is available on GitHub, so users can inspect it, modify it, and deploy it independently. This openness provides transparency, avoids vendor lock-in, and lets the community contribute to the project's development.

How does Agenta integrate with existing AI stacks?

Agenta is designed to fit into existing stacks. It works with popular LLM frameworks such as LangChain and LlamaIndex and supports models from a wide range of providers, including OpenAI, Anthropic, and open-source models. Because it is model-agnostic, teams can adopt Agenta without replacing their current tools or model choices.

Can non-technical team members really use Agenta effectively?

Yes. A core feature of Agenta is its collaborative UI for cross-functional teams. Product managers and domain experts can use a secure interface to experiment with prompts, configure evaluations, and review results without writing or reading code. This opens up the iteration process and keeps technical and business stakeholders aligned.

What is the difference between offline and online (live) evaluations?

Offline evaluations run on static test datasets to validate changes before deployment. Online (live) evaluations run on real user traffic in production. They continuously track key performance indicators, so teams can detect regressions or drift in model behavior as they happen, using actual user data.
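One way to connect the two modes is to use the offline score as a baseline and compare a rolling average of live evaluator scores against it. The sketch below assumes each production request has already been scored by some evaluator; the `OnlineMonitor` class, its parameters, and the alert rule are hypothetical illustrations, not part of Agenta's API.

```python
from collections import deque
from typing import Deque


class OnlineMonitor:
    """Rolling-window check of live evaluator scores (hypothetical helper, not Agenta's API)."""

    def __init__(self, baseline: float, window: int = 50, tolerance: float = 0.1) -> None:
        self.baseline = baseline    # e.g. the score this version earned in offline evaluation
        self.tolerance = tolerance  # how far below the baseline counts as a regression
        self.scores: Deque[float] = deque(maxlen=window)  # oldest scores drop off automatically

    def record(self, score: float) -> bool:
        """Add one live score; return True if the rolling mean has regressed."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # wait for a full window before raising an alert
        rolling_mean = sum(self.scores) / len(self.scores)
        return rolling_mean < self.baseline - self.tolerance


# Usage: offline evaluation gave 0.9; live traffic later starts failing.
monitor = OnlineMonitor(baseline=0.9, window=4, tolerance=0.2)
alerts = [monitor.record(s) for s in [1.0, 1.0, 1.0, 1.0, 0.0, 0.0]]
print(alerts)  # [False, False, False, False, False, True]
```

A single bad response does not trigger an alert here; only a sustained drop in the rolling mean does, which trades a little detection latency for fewer false alarms.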
